MITRE ATT&CK has arguably become a cyber security industry buzzword, just another checkbox in RFPs that a product must mention before it will be considered for purchase. The overuse is at least well deserved: the framework is useful in many situations where assessment teams and defenders need to work together.

Over the past few years, I’ve introduced a number of blue teams to MITRE ATT&CK and helped them put it to use. When I ask people whether they have experience working with the ATT&CK framework, a common response is that they are aware of it but have limited hands-on knowledge and wouldn’t know where to begin. Here, I’ll provide an overview of my current methodology and review some of the tools I use to give blue teams a kickstart in their programs.

This will be broken up into three parts covering visibility, prevention and detection.

Part One

Visibility

When dropping into any kind of environment, I believe it is best to first evaluate where you would be able to detect an attack if one occurred. Read left to right, the ATT&CK framework walks across the tactics threat actors use to get into an environment, execute malicious code, move across the environment, and ultimately accomplish a goal such as deploying ransomware across a network. Each of those steps presents an opportunity to observe what an attacker is doing, but you need to understand what in your environment would generate that visibility.

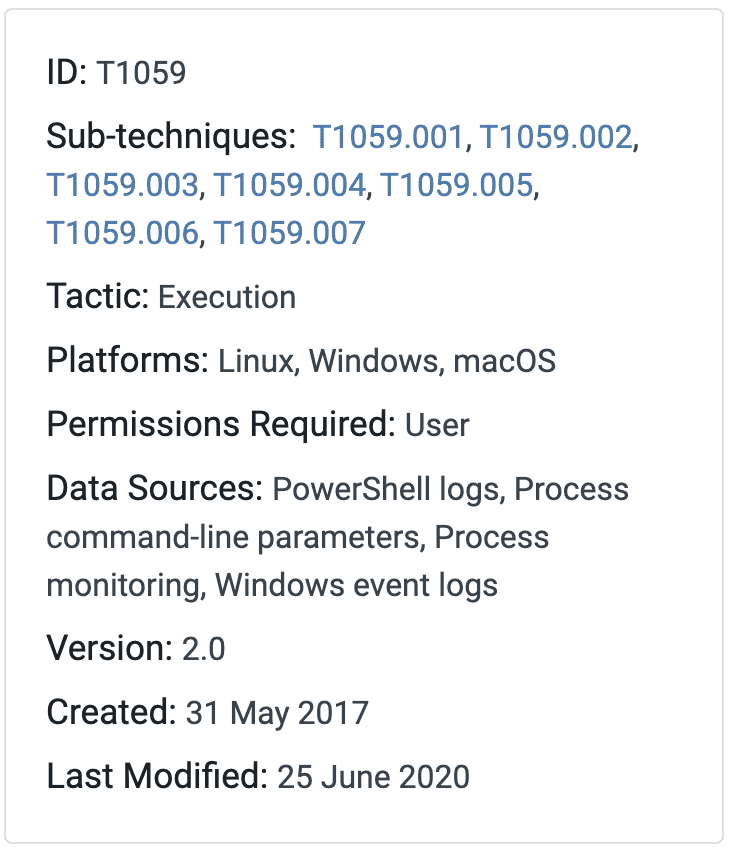

Each technique described in the ATT&CK framework lists recommended data sources to collect in order to detect it. What MITRE can’t do yet, but is getting better at, is prescribing which products or services can provide those sources of information. Products and services are always changing their capabilities, and it is difficult to keep an up-to-date, vendor-agnostic list of them!

Before I go much further, I should define visibility. To me, visibility means data that is captured and centralized in a single platform, with a high degree of reliability, for analysis. You cannot have visibility into an environment if an actor could wipe log entries, or if disparate internal groups need to pull logs manually while an event is occurring. This foundational work needs to happen before you can have visibility into attacks.

When I begin evaluating an environment, I take a high-level sample of the major products and services. I first try to identify which endpoint, network, email, and identity protections are available and how widely they are deployed. Depending on the product or service chosen, capabilities can vary widely. The most common example is the difference between a traditional Antivirus/Endpoint Protection Platform (EPP) and a powerful Endpoint Detection and Response (EDR) solution. While antivirus solutions provide great signals to direct an analyst toward where attacks could be occurring, they depend on signatures being updated at the speed at which attacks occur, and they generally capture limited detail for retroactively identifying behaviors based on new evidence.

I then review the technologies, analyze their capabilities, and record which ATT&CK data sources they contribute. This exercise is subjective, but it is a great place to begin evaluating which techniques and tactics you have visibility into. I’ll continue with the EPP vs. EDR example from above:

EPP example:

```yaml
- name: Symantec Endpoint Protection
  data_sources:
    - Anti-virus
```

EDR example:

```yaml
- name: CrowdStrike
  data_sources:
    - Process monitoring
    - File monitoring
    - Process command-line parameters
    - API monitoring
    - Process use of network
    - Windows Registry
    - Authentication logs
```

I use a structure similar to this to support two tools. The first, Data Source Map (dsmap), is a tool I made that generates a file which can then be used by the second tool, DeTTECT.
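To make the product-to-data-source mapping concrete, here is a minimal Python sketch (not dsmap’s actual code) that inverts the EPP and EDR examples above into a data source → products index, which is the shape of information you need before scoring anything. The `PRODUCTS` list simply mirrors those two examples; loading your own YAML file would need a third-party parser such as PyYAML, since the standard library has none, so that step is left out here.

```python
from collections import defaultdict

# Mirrors the EPP and EDR examples above; replace with your own inventory.
PRODUCTS = [
    {"name": "Symantec Endpoint Protection", "data_sources": ["Anti-virus"]},
    {
        "name": "CrowdStrike",
        "data_sources": [
            "Process monitoring",
            "File monitoring",
            "Process command-line parameters",
            "API monitoring",
            "Process use of network",
            "Windows Registry",
            "Authentication logs",
        ],
    },
]


def index_data_sources(products):
    """Invert product -> data sources into data source -> sorted product names."""
    index = defaultdict(set)
    for product in products:
        for source in product["data_sources"]:
            index[source].add(product["name"])
    return {source: sorted(names) for source, names in index.items()}


if __name__ == "__main__":
    for source, names in sorted(index_data_sources(PRODUCTS).items()):
        print(f"{source}: {', '.join(names)}")
```

Keeping the index keyed by data source makes it easy to see when a single product is the only provider of a source, which is a useful signal on its own.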

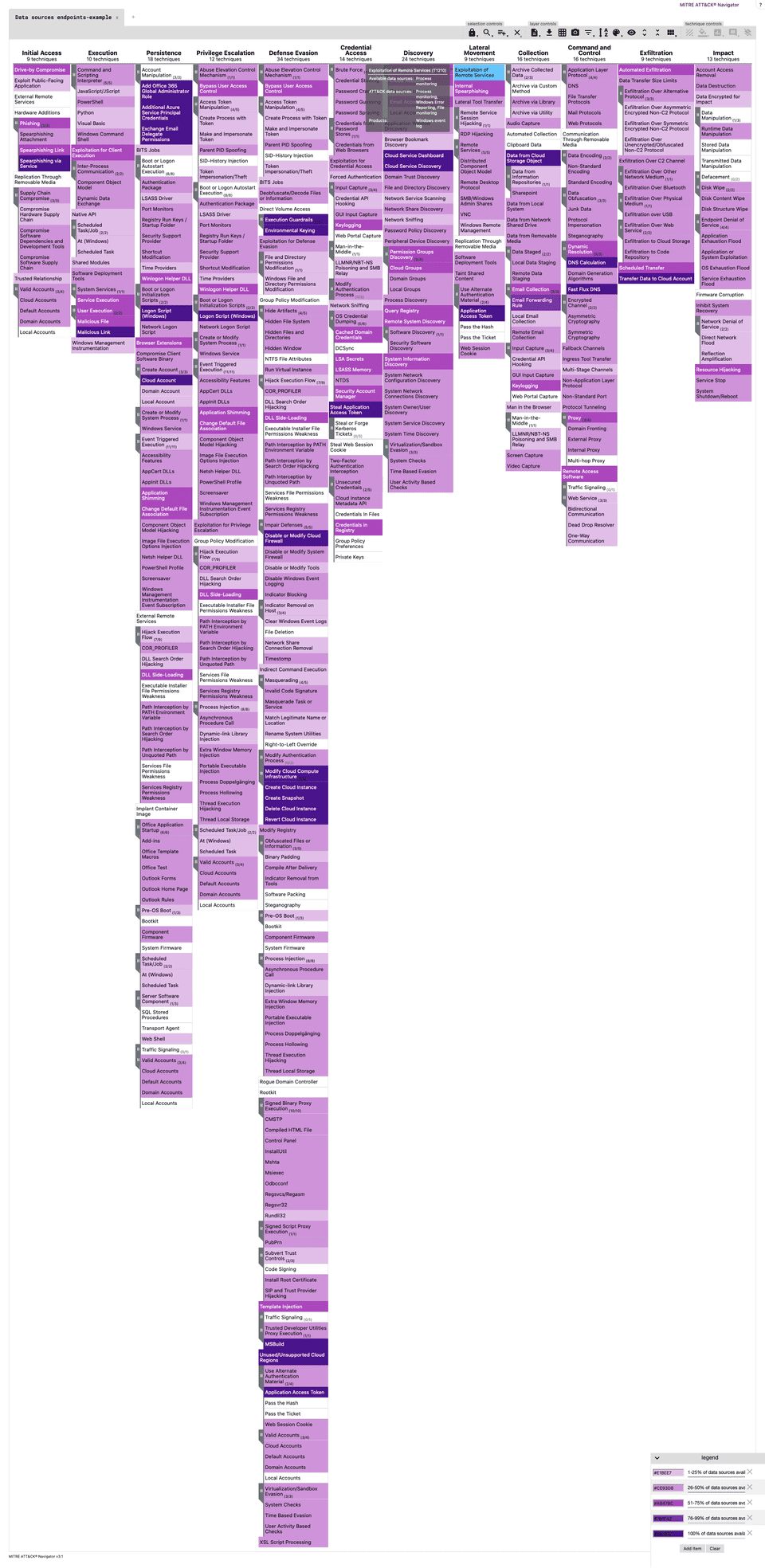

DeTTECT is an awesome project that helps score and track alignment to MITRE ATT&CK over time across a number of different use cases. Once our data source files are created, we can run DeTTECT to generate a visualization of how our data sources contribute to visibility across the framework. The output is a layer file that can be loaded directly into the ATT&CK Navigator.
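For intuition about what such a layer contains, here is a hedged sketch that turns hypothetical per-technique scores into a minimal ATT&CK Navigator-style layer. This is not DeTTECT’s output format; it uses only a small subset of the Navigator layer fields (`name`, `domain`, `description`, `gradient`, `techniques` with `techniqueID` and `score`), and depending on your Navigator release the file may also need fields such as `versions`.

```python
import json


def build_layer(scores, name="Data source visibility"):
    """Build a minimal ATT&CK Navigator-style layer from {technique_id: score}."""
    max_score = max(scores.values(), default=0)
    return {
        "name": name,
        "domain": "enterprise-attack",
        "description": "Count of recommended data sources available per technique",
        # Two-color gradient: white for low scores, dark blue for high scores.
        "gradient": {
            "colors": ["#ffffff", "#08306b"],
            "minValue": 0,
            "maxValue": max(max_score, 1),  # avoid a zero-width range
        },
        "techniques": [
            {"techniqueID": technique_id, "score": score}
            for technique_id, score in sorted(scores.items())
        ],
    }


if __name__ == "__main__":
    # Hypothetical scores for illustration only.
    print(json.dumps(build_layer({"T1059": 3, "T1003": 1}), indent=2))
```

Scaling the gradient’s `maxValue` to the highest score keeps the best-covered techniques at the darkest color.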

When you hover over any technique in the Navigator, the layer DeTTECT generated shows which data sources are recommended for identifying that technique, which of those data sources you have available, and which products contributed them. The more data sources available, the darker the technique is colored.
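The scoring behind that hover text can be sketched roughly as follows: count how many of a technique’s recommended data sources you collect, and list which products provide each one. The recommended lists below are illustrative placeholders, NOT copied from ATT&CK, and the simple count is an assumed scoring rule rather than DeTTECT’s own scoring model.

```python
# Illustrative subsets only, NOT copied from ATT&CK; pull the real
# recommended data sources for each technique from the framework itself.
RECOMMENDED = {
    "T1059": ["Process monitoring", "Process command-line parameters", "Windows event logs"],
    "T1003": ["API monitoring", "PowerShell logs", "Windows event logs"],
}

# Data source -> products providing it, mirroring the EPP and EDR examples.
EDR_SOURCES = [
    "Process monitoring", "File monitoring", "Process command-line parameters",
    "API monitoring", "Process use of network", "Windows Registry", "Authentication logs",
]
AVAILABLE = {source: ["CrowdStrike"] for source in EDR_SOURCES}
AVAILABLE["Anti-virus"] = ["Symantec Endpoint Protection"]


def technique_visibility(recommended, available):
    """Map each technique to (score, comment).

    score is the number of recommended data sources that are available;
    comment lists each recommended source with the products providing it.
    """
    result = {}
    for technique, sources in recommended.items():
        covered = [s for s in sources if s in available]
        lines = [f"{s}: {', '.join(available[s])}" for s in covered]
        lines += [f"{s}: not collected" for s in sources if s not in available]
        result[technique] = (len(covered), "\n".join(lines))
    return result


if __name__ == "__main__":
    for technique, (score, comment) in technique_visibility(RECOMMENDED, AVAILABLE).items():
        print(f"{technique} (score {score})\n{comment}\n")
```

A raw count treats every data source as equally valuable; weighting sources by data quality is a natural refinement.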

Our understanding of visibility is really starting to take shape now. Even so, the ATT&CK framework is still large and open-ended. What should I prioritize within it?

If you don’t know which attacks your company would most commonly encounter and you don’t have threat modeling specific to your scenario, it makes sense to start from industry research on the most common techniques and then work toward identifying your own threat scenarios. Some of my favorite reports:

- CrowdStrike Global Threat Report: https://www.crowdstrike.com/resources/reports/2020-crowdstrike-global-threat-report/

- Red Canary Threat Report: https://redcanary.com/blog/worms-dominate-2020-threat-detection-report/

Using these reports, you can identify the techniques threat actors most commonly use and compare them with what you have visibility into. Any gap between the data sources needed for the most common techniques and what your security products and services produce should be closed quickly.
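That comparison can be sketched as a small gap check, assuming you have already reduced a report to a list of technique IDs and scored your own visibility per technique. Both `TOP_TECHNIQUES` and `VISIBILITY` below are hypothetical placeholders, not figures from either report, and the `minimum` threshold is an assumed knob for how much coverage counts as enough.

```python
# Hypothetical technique IDs standing in for a report's "most common" list.
TOP_TECHNIQUES = ["T1059", "T1003", "T1566"]

# Hypothetical visibility scores (number of recommended data sources collected).
VISIBILITY = {"T1059": 2, "T1003": 1}


def visibility_gaps(top_techniques, visibility, minimum=1):
    """Return common techniques scoring below `minimum`, keeping report order."""
    return [t for t in top_techniques if visibility.get(t, 0) < minimum]


if __name__ == "__main__":
    print("No visibility:", visibility_gaps(TOP_TECHNIQUES, VISIBILITY))
    print("Thin visibility:", visibility_gaps(TOP_TECHNIQUES, VISIBILITY, minimum=2))
```

Keeping the report’s ordering means the most prevalent uncovered techniques surface first.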

While this post was sitting in my drafts, two great new articles on this topic were published. Please check them out:

Defining ATT&CK Data Sources, Part I: Enhancing the Current State

Defining ATT&CK Data Sources, Part II: Operationalizing the Methodology